Understanding AI Marking

Caliper uses Anthropic's Claude AI to help you mark assignments faster and more consistently. This guide explains how AI marking works and how to use it effectively.

🎥 Video Tutorial: Complete AI Marking Workflow

Watch this short walkthrough to see the complete AI marking process in action:

Video chapters:

- 0:00 - Introduction

- 0:30 - Navigating to submissions

- 1:00 - Triggering AI analysis

- 2:00 - Reviewing AI results

- 4:00 - Making teacher adjustments

- 5:30 - Saving final grades

How AI Marking Works

The Process

- Upload: Student submits assignment (PDF, ZIP, etc.)

- Extraction: AI reads text from submission

- Analysis: AI evaluates against rubric criteria

- Scoring: AI assigns points per criterion

- Feedback: AI generates explanatory comments

- Review: You review and adjust if needed
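The stages above can be pictured as a simple pipeline. The following is a minimal Python sketch for illustration only; it is not Caliper's actual implementation. The names `Criterion`, `CriterionResult`, `mark_submission`, and `fake_evaluate` are hypothetical, and the AI call is replaced by a stand-in function.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Criterion:
    name: str
    max_points: int


@dataclass
class CriterionResult:
    criterion: str
    points: int
    max_points: int
    feedback: str


def mark_submission(
    text: str,
    rubric: List[Criterion],
    evaluate: Callable[[str, Criterion], CriterionResult],
) -> dict:
    """Run the analysis, scoring, and feedback stages on already-extracted text."""
    results = [evaluate(text, criterion) for criterion in rubric]
    return {
        "results": results,
        "total": sum(r.points for r in results),
        "maximum": sum(c.max_points for c in rubric),
        # The last stage is always a human one: the teacher reviews and adjusts.
        "status": "awaiting_teacher_review",
    }


def fake_evaluate(text: str, criterion: Criterion) -> CriterionResult:
    # Hypothetical stand-in for the AI call: full marks for any non-empty text.
    points = criterion.max_points if text.strip() else 0
    return CriterionResult(criterion.name, points, criterion.max_points, "placeholder feedback")


rubric = [Criterion("Docstrings", 10), Criterion("Naming", 5)]
outcome = mark_submission("def add(a, b): return a + b", rubric, fake_evaluate)
print(outcome["total"], "/", outcome["maximum"], outcome["status"])
```

The point of the sketch is the shape of the flow: each rubric criterion gets its own score and feedback, and the result stays in a review state until you approve it.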

What AI Can Do

- ✅ Read and understand code, text, and structured content

- ✅ Evaluate against rubric criteria

- ✅ Identify strengths and weaknesses

- ✅ Provide specific, constructive feedback

- ✅ Score consistently across submissions

- ✅ Save hours of marking time

What AI Cannot Do

- ❌ Replace teacher judgment completely

- ❌ Understand context outside the submission

- ❌ Grade creative/subjective work perfectly

- ❌ Read handwritten content (unless scanned clearly)

- ❌ Execute or test code

Using AI Marking

Step 1: Prepare Your Assignment

Requirements:

- Assignment has a linked rubric

- Rubric criteria are specific and clear

- Submissions are in a supported format (PDF preferred)

Step 2: Trigger AI Analysis

- Open the submission in the marking interface

- Click the "AI Mark" button

- Wait for the analysis to finish (typically 10-30 seconds)

- Follow progress and review the results in the real-time console

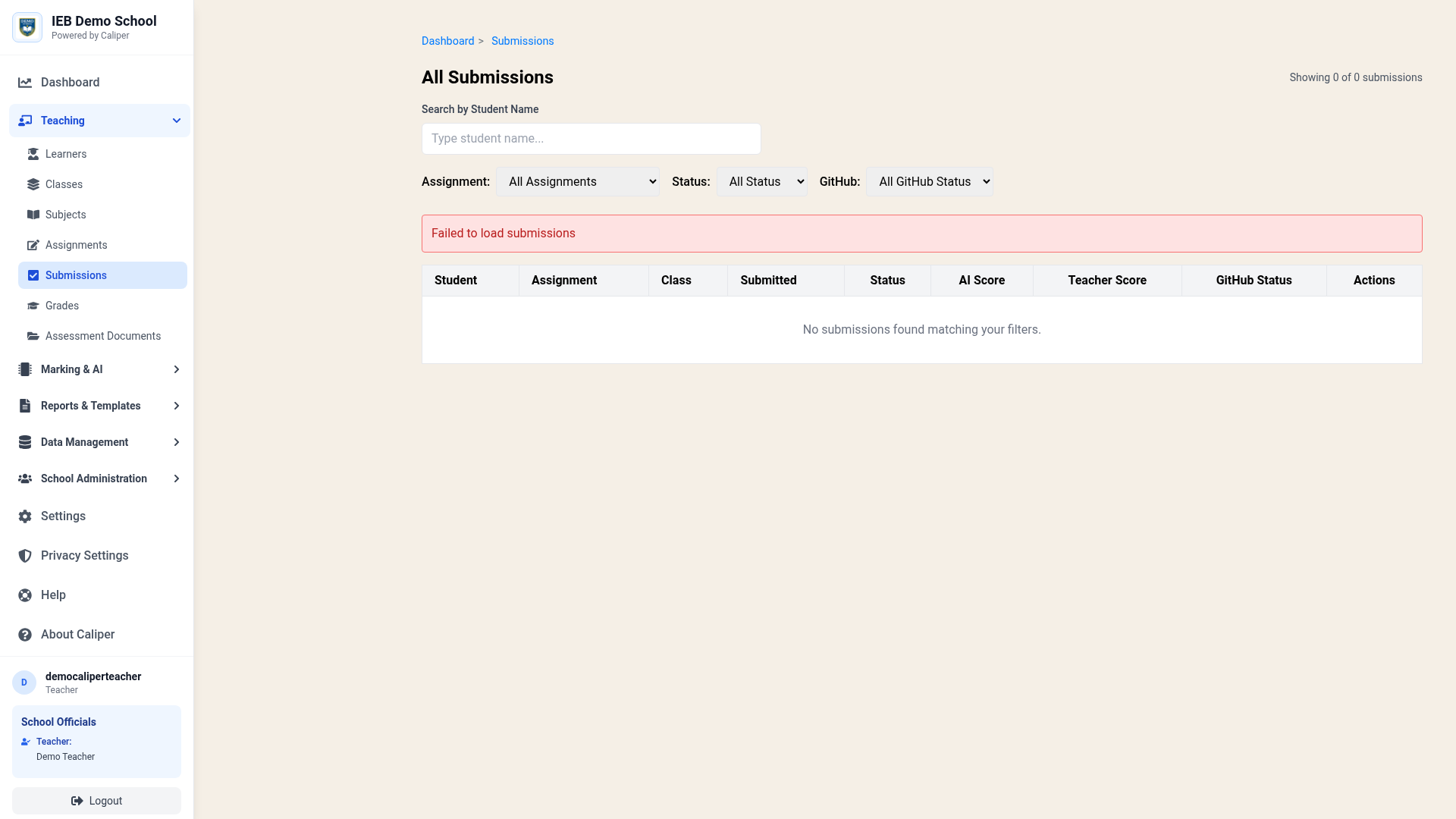

The Submissions page showing all student submissions with AI marking controls

Step 3: Review AI Results

AI Provides:

- Overall suggested score

- Score per rubric criterion

- Detailed feedback

- Highlighted strengths

- Areas for improvement

Your Role:

- Review for accuracy

- Adjust scores if needed

- Add personal comments

- Approve or modify feedback

Step 4: Finalize Marking

- Make any necessary adjustments

- Add teacher-specific comments

- Click "Save Marking"

- The student receives the combined AI and teacher feedback

Maximizing AI Accuracy

Write Better Rubrics

Specific Criteria Win:

✅ Good: "Code includes docstrings for all functions with parameters and return type documented"

❌ Vague: "Code is well documented"

Provide Examples:

✅ Good: "Variables use snake_case (e.g., student_name, total_score)"

❌ Vague: "Proper naming conventions"

Set Clear Standards:

✅ Good: "All user inputs validated before processing (check type, range, null)"

❌ Vague: "Input validation present"
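To make the three "Good" criteria above concrete, here is a short, made-up example of student code that would satisfy all of them: a docstring covering parameters and return type, snake_case names, and inputs validated for null, type, and range. The function and its limits are invented for illustration.

```python
def calculate_average(total_score, student_count):
    """Return the average score per student.

    Parameters:
        total_score (int or float): Sum of all scores; must be 0 or more.
        student_count (int): Number of students; must be at least 1.

    Returns:
        float: total_score divided by student_count.
    """
    # Validate inputs before processing: null first, then type, then range.
    if total_score is None or student_count is None:
        raise ValueError("inputs must not be None")
    if not isinstance(total_score, (int, float)):
        raise TypeError("total_score must be a number")
    if not isinstance(student_count, int):
        raise TypeError("student_count must be a whole number")
    if total_score < 0 or student_count < 1:
        raise ValueError("total_score must be >= 0 and student_count >= 1")
    return total_score / student_count


print(calculate_average(84, 2))
```

Because each criterion names something that can be checked directly in the code, a marker (human or AI) can point to the exact line that meets or misses it.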

Use Preamble & Postamble

Preamble (before marking):

Context: Grade 10 Python assignment

Students know: Variables, functions, basic file I/O

They DON'T know: Classes, decorators, async

Be understanding of minor syntax errors if logic is sound.

Postamble (after marking):

Tone: Encouraging and constructive

Format: Start with positives, then areas to improve

Language: Student-friendly, avoid jargon
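One way to picture how a preamble and postamble wrap the marking request is the sketch below. This guide does not describe how Caliper assembles its prompt internally, so the `build_prompt` function, the section labels, and the ordering are all assumptions made for illustration.

```python
def build_prompt(preamble, criteria, submission_text, postamble):
    """Join teacher context, rubric, student work, and style guidance (assumed order)."""
    rubric_block = "\n".join(f"- {criterion}" for criterion in criteria)
    sections = [
        preamble.strip(),                       # context the AI reads first
        "Rubric criteria:\n" + rubric_block,    # what to evaluate against
        "Submission:\n" + submission_text.strip(),
        postamble.strip(),                      # tone and format for the feedback
    ]
    # Skip empty sections so a missing preamble or postamble leaves no gap.
    return "\n\n".join(section for section in sections if section)


prompt = build_prompt(
    preamble="Context: Grade 10 Python assignment",
    criteria=["Variables use snake_case (e.g., student_name, total_score)"],
    submission_text="student_name = input('Name: ')",
    postamble="Tone: Encouraging and constructive",
)
print(prompt)
```

The takeaway is the division of labour: the preamble shapes how the work is judged, while the postamble shapes how the feedback is written.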

Choose the Right File Formats

Best → Worst:

- 📄 PDF (code + explanations combined)

- 📦 ZIP (multiple files, preserved structure)

- 📝 Text/Code (single files)

- 🖼️ Images (screenshots of code - less reliable)

- ✍️ Handwritten (scanned, least reliable)

AI Marking Console

Real-Time Status Tracking

Monitor AI analysis progress:

- 📝 PDF Extraction: Reading file content

- 🔄 Preparing Prompt: Setting up rubric

- 🤖 Calling AI: Analyzing submission

- ✅ Processing Response: Generating scores

- 💾 Saving Results: Updating database

Understanding Output

Console Messages:

[INFO] Starting AI marking for submission #123

[INFO] PDF extraction completed: 1,234 characters

[INFO] Prompt prepared with 5 rubric criteria

[INFO] AI API call successful

[INFO] Scores: 42/50 (84%)

[SUCCESS] Marking completed
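If you copy console output into your own notes or scripts, the messages are regular enough to read programmatically. The sketch below assumes the `[LEVEL] message` and `Scores: earned/possible` layout shown in the sample above; the real console output may differ, and the `summarize` helper is hypothetical.

```python
import re

LOG_LINE = re.compile(r"^\[(?P<level>[A-Z]+)\]\s+(?P<message>.*)$")
SCORE = re.compile(r"Scores:\s*(?P<earned>\d+)/(?P<possible>\d+)")


def summarize(log_text):
    """Return the last log level seen and the score reported in the output."""
    summary = {"last_level": None, "earned": None, "possible": None, "percent": None}
    for line in log_text.splitlines():
        match = LOG_LINE.match(line.strip())
        if not match:
            continue  # skip blank or unrecognized lines
        summary["last_level"] = match.group("level")
        score = SCORE.search(match.group("message"))
        if score:
            earned = int(score.group("earned"))
            possible = int(score.group("possible"))
            summary["earned"] = earned
            summary["possible"] = possible
            # Recompute the percentage rather than trusting the printed one.
            summary["percent"] = round(100 * earned / possible) if possible else None
    return summary


sample = """[INFO] Starting AI marking for submission #123
[INFO] Scores: 42/50 (84%)
[SUCCESS] Marking completed"""
print(summarize(sample))
```

A final level of `SUCCESS` together with a score line means the run completed; any other final level is a cue to check the Troubleshooting section.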

Common AI Marking Scenarios

Scenario 1: Perfect Match

- AI understands requirements

- Scoring aligns with expectations

- Feedback is accurate and helpful

- Action: Accept the AI suggestions and, if you like, add a personal note

Scenario 2: Minor Adjustment Needed

- AI identifies most issues correctly

- One or two scores need tweaking

- Feedback is mostly accurate

- Action: Adjust specific criteria, add clarifying comments

Scenario 3: Significant Override

- AI misses key aspects

- Scores don't reflect actual work quality

- Context not understood properly

- Action: Use teacher override, explain reasoning in comments

Scenario 4: AI Struggles

- Unusual format or content

- Highly creative/abstract work

- Rubric unclear or too broad

- Action: Mark manually, refine rubric for next time

Advanced AI Features

Queue System for Large Documents

For submissions longer than 10 pages:

- Automatically queued for processing

- Background analysis to avoid timeouts

- Status tracking in Submissions page

- Email notification on completion
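The routing rule described above can be sketched in a few lines. Only the 10-page threshold comes from this guide; the `route_submission` function and the in-memory queue are hypothetical stand-ins for whatever Caliper uses behind the scenes.

```python
from collections import deque

PAGE_THRESHOLD = 10  # from this guide: submissions over 10 pages are queued

background_queue = deque()  # hypothetical stand-in for the real job queue


def route_submission(submission_id, page_count):
    """Mark short documents right away; queue long ones for background analysis."""
    if page_count > PAGE_THRESHOLD:
        background_queue.append(submission_id)
        return "queued"
    return "immediate"


print(route_submission(101, 10))  # exactly 10 pages is not over the threshold
print(route_submission(102, 11))
print(list(background_queue))
```

In practice this means a long report will not show results straight away: look for its status on the Submissions page or wait for the email.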

Batch AI Marking

Mark multiple submissions efficiently:

- Select submissions in Fast Mark

- Click "Batch AI Mark"

- AI processes in background

- Review results in bulk

AI Health Monitoring

Check AI service status:

- Dashboard indicator: Shows AI availability

- Error handling: Automatic retry on failure

- Fallback: Manual marking always available
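"Automatic retry on failure" usually follows a pattern like the one sketched below: try again a few times, waiting longer between attempts, then give up so a fallback can take over. The number of attempts and the delays here are assumptions, not Caliper's documented settings, and `call_with_retry` is a hypothetical helper.

```python
import time


def call_with_retry(operation, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call operation(); on failure wait and retry, doubling the delay each time."""
    for attempt in range(attempts):
        try:
            return operation()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of retries: the caller falls back to manual marking
            sleep(base_delay * 2 ** attempt)


# Demonstration with a made-up service that fails twice, then succeeds.
calls = {"count": 0}


def flaky_service():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("service unavailable")
    return "ok"


delays = []  # record the waits instead of actually sleeping
result = call_with_retry(flaky_service, sleep=delays.append)
print(result, delays)
```

If every attempt fails, the error surfaces instead of being hidden, which is where the manual marking fallback comes in.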

Troubleshooting

"AI marking failed"

Causes:

- No rubric linked to assignment

- Unsupported file format

- File corrupted or encrypted

- Temporary AI service issue

Solutions:

- Verify rubric is linked

- Check that the file opens normally

- Try re-uploading the submission

- Contact support if the problem persists

"Scores seem random"

Causes:

- Vague rubric criteria

- Missing rubric descriptions

- Conflicting requirements

Solutions:

- Make criteria more specific

- Add examples to rubric

- Use preamble for context

- Test with sample submission

"Feedback too generic"

Causes:

- Broad rubric criteria

- No postamble guidance

- Limited submission content

Solutions:

- Break criteria into specifics

- Add postamble for tone/style

- Encourage detailed submissions

"AI missed obvious issues"

Causes:

- Issue not in rubric criteria

- File extraction problem

- Context not provided

Solutions:

- Add criterion for missed aspect

- Check PDF text extraction

- Use preamble to explain context

Best Practices

✅ Do's

- Review every AI result before finalizing

- Refine rubrics based on AI performance

- Use AI as assistant, not replacement

- Provide feedback on AI accuracy (to us!)

- Test rubrics before live assignments

❌ Don'ts

- Don't blindly accept AI scores

- Don't use AI without rubrics

- Don't expect perfection on creative work

- Don't ignore obvious AI errors

- Don't forget to add a personal touch

Privacy & Security

Data Handling

- Submissions sent securely to AI service

- No student data stored by AI provider

- Processed in real-time, not retained

- POPIA compliant

AI Service

- Provider: Anthropic Claude

- Location: Secure cloud infrastructure

- Encryption: TLS in transit

- Retention: Zero (not stored)

Next Steps

- Fast Mark Interface - Speed up marking workflow

- Creating Effective Rubrics - Optimize for AI

- PAT Assessment - Multi-phase AI marking

Support

- 📧 Email: info@restrat.co.za

- 📚 Documentation: Teacher Guide

- 🔗 Platform: caliper.restrat.co.za